Reinforcement Learning and Communication Protocols for Autonomous Vehicles

In the rapidly evolving landscape of autonomous vehicle (AV) technology, engineering progress toward cars that drive themselves has been remarkable. However, a critical gap remains in addressing the ethical dimensions of how these vehicles make decisions, particularly in complex multi-agent environments such as urban intersections. A new theoretical framework aims to bridge this gap by integrating ethical reinforcement learning, vehicle-to-everything (V2X) communication protocols, and large language models (LLMs) to improve AV decision-making.

The Ethics Gap in Autonomous Driving

Despite significant technological advances in autonomous driving capabilities, questions of fairness, risk distribution, and inter-vehicle cooperation remain inadequately addressed in current AV deployments. Nakamura and Ji's comprehensive 2023 review identifies this gap in ethical frameworks as one of five major hurdles to widespread AV adoption.

“The ‘black box’ problem remains the principal barrier to public trust in autonomous systems,” note Ortiz and Miller (2023), highlighting the urgent need for systems that not only make safe decisions but can explain them in human terms.

A Novel Three-Tiered Framework

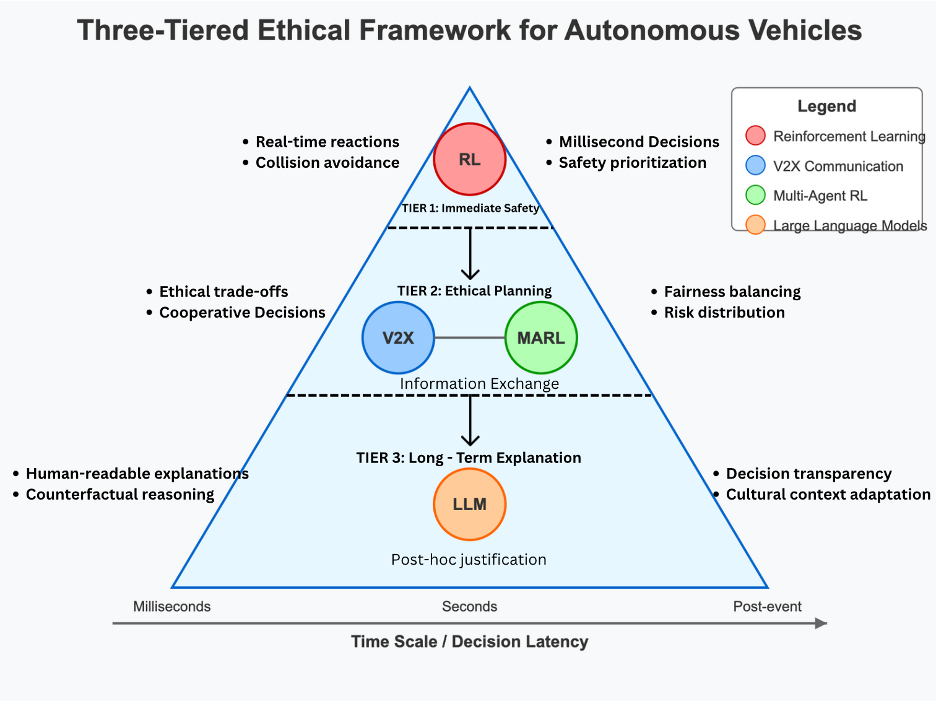

The proposed framework is organized into three tiers, each addressing a different time horizon and context of ethical decision-making:

Tier 1: Immediate Safety Response

The first tier focuses on split-second safety decisions using reinforcement learning algorithms designed to react to imminent threats or collision scenarios. Building on Patel and Ryu’s (2023) breakthrough research in millisecond-level ethical collision avoidance, this layer prioritizes immediate harm prevention through continuous environmental monitoring and rapid response capabilities.

When an autonomous vehicle detects a potential collision with a pedestrian or another vehicle, this tier activates immediately and selects evasive maneuvers using pre-trained reinforcement learning models that have internalized safety-critical responses. Unlike conventional emergency braking systems, these models incorporate ethical weightings that aim to minimize overall harm rather than solely protect the vehicle's occupants.
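To make "ethical weightings" concrete, the minimal sketch below scores candidate evasive maneuvers by expected harm summed over all road users, and contrasts that with an occupant-only policy. The `Outcome` fields, maneuver names, probabilities, and severity weights are all hypothetical; in the described framework these estimates would come from the pre-trained RL models and the perception stack, which are not shown.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Predicted result of one maneuver for one road user (hypothetical fields)."""
    party: str        # e.g. "occupant", "pedestrian", "other_vehicle"
    p_injury: float   # estimated probability of injury, 0..1
    severity: float   # assumed severity weight; higher means worse


def expected_harm(outcomes: list[Outcome], occupant_only: bool = False) -> float:
    """Probability-weighted severity, summed over the parties considered."""
    return sum(
        o.p_injury * o.severity
        for o in outcomes
        if not occupant_only or o.party == "occupant"
    )


def choose_maneuver(candidates: dict[str, list[Outcome]],
                    occupant_only: bool = False) -> str:
    """Pick the maneuver with the lowest expected harm."""
    return min(candidates, key=lambda m: expected_harm(candidates[m], occupant_only))


# Illustrative numbers only; not taken from the research described here.
candidates = {
    "brake_straight": [Outcome("occupant", 0.30, 1.0), Outcome("pedestrian", 0.40, 3.0)],
    "swerve_left":    [Outcome("occupant", 0.50, 1.0), Outcome("pedestrian", 0.05, 3.0)],
}
print(choose_maneuver(candidates))                      # swerve_left
print(choose_maneuver(candidates, occupant_only=True))  # brake_straight
```

With these made-up numbers, counting everyone favors swerving (expected harm 0.65 versus 1.5), while an occupant-only policy would brake straight. That is the distinction between harm minimization and occupant protection drawn above.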

Tier 2: Ethical Planning and Cooperation

The second tier addresses short-term decisions with ethical trade-offs, such as determining which vehicle yields at an intersection or how to balance risks between different road users. This layer incorporates Matsushita and Levine’s (2023) novel approach to encoding cultural driving norms into multi-agent systems through differentiable rule-based rewards.
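The article does not spell out how Matsushita and Levine formulate their rewards, so the sketch below is only a generic illustration of what a differentiable rule-based reward can look like. A hard rule (here a hypothetical local norm capping speed near an intersection) is replaced by a smooth softplus penalty whose gradient exists everywhere, so it can be optimized with gradient-based RL. The norm limit, `weight`, and `sharpness` are assumed, culture-specific parameters.

```python
import math


def softplus(x: float, sharpness: float) -> float:
    """Smooth, numerically stable approximation of max(0, x)."""
    z = sharpness * x
    # max(z, 0) + log(1 + exp(-|z|)) equals log(1 + exp(z)) without overflow
    return (max(z, 0.0) + math.log1p(math.exp(-abs(z)))) / sharpness


def _sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def rule_reward(speed: float, norm_limit: float,
                weight: float = 1.0, sharpness: float = 2.0) -> float:
    """Negative, differentiable penalty for exceeding a (hypothetical) local speed norm."""
    return -weight * softplus(speed - norm_limit, sharpness)


def rule_reward_grad(speed: float, norm_limit: float,
                     weight: float = 1.0, sharpness: float = 2.0) -> float:
    """Analytic d(reward)/d(speed) = -weight * sigmoid(sharpness * (speed - limit))."""
    return -weight * _sigmoid(sharpness * (speed - norm_limit))


# Same speed, two hypothetical cultural norms: the stricter norm penalizes more.
print(rule_reward(30.0, norm_limit=25.0))
print(rule_reward(30.0, norm_limit=35.0))
```

Swapping in a different `norm_limit` or `weight` per region is one way such a reward could encode differing driving cultures while staying smooth enough for gradient-based training.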

For example, when multiple autonomous vehicles approach an unmarked intersection simultaneously, Tier 2 enables them to negotiate right of way based not just on efficiency but on ethical considerations such as urgency (is one vehicle an ambulance?), vulnerability (is one vehicle carrying children?), or fairness (which vehicle has been waiting longer?).
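One simple way to picture this negotiation is a weighted priority score that every vehicle computes from the same shared attributes, with ties broken deterministically so all vehicles reach the same ordering. The `Claim` fields, the weights, and the linear scoring rule are illustrative assumptions, not the framework's published mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """Hypothetical priority claim broadcast by a vehicle approaching the intersection."""
    vehicle_id: str
    emergency: bool            # urgency, e.g. an ambulance on call
    vulnerable_onboard: bool   # vulnerability, e.g. children on board
    wait_s: float              # fairness: seconds spent waiting so far


# Illustrative weights; a deployed system would tune or regulate these.
WEIGHTS = {"emergency": 100.0, "vulnerable": 10.0, "wait_per_s": 1.0}


def priority(c: Claim) -> float:
    """Linear score over urgency, vulnerability, and waiting time."""
    return (WEIGHTS["emergency"] * c.emergency
            + WEIGHTS["vulnerable"] * c.vulnerable_onboard
            + WEIGHTS["wait_per_s"] * c.wait_s)


def passage_order(claims: list[Claim]) -> list[str]:
    """Highest priority first; vehicle_id breaks ties so every agent agrees."""
    ranked = sorted(claims, key=lambda c: (-priority(c), c.vehicle_id))
    return [c.vehicle_id for c in ranked]


claims = [
    Claim("car_A", emergency=False, vulnerable_onboard=True, wait_s=4.0),    # score 14
    Claim("car_B", emergency=False, vulnerable_onboard=False, wait_s=12.0),  # score 12
    Claim("ambulance", emergency=True, vulnerable_onboard=False, wait_s=0.0),  # score 100
]
print(passage_order(claims))  # ['ambulance', 'car_A', 'car_B']
```

Because each vehicle applies the same deterministic function to the same claims, they can agree on an order without a central coordinator. The catch is that claims must be trustworthy, since a vehicle could otherwise exaggerate its own priority.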

Gonzalez and Kim’s (2024) research on reliable low-latency ethical information exchange in adversarial driving conditions proves particularly relevant here, enabling vehicles to share ethical priorities securely even under challenging network conditions.
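The specifics of Gonzalez and Kim's protocol are not given here. As a generic illustration of sharing priorities securely, the sketch below attaches a message authentication code and a timestamp to each claim and rejects messages that fail verification or are too old (a basic replay defense). It uses a pre-shared HMAC key purely for brevity; real V2X security, such as IEEE 1609.2, relies on certificate-based digital signatures instead. The message layout and the 100 ms freshness window are assumptions.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

MAX_AGE_S = 0.1  # hypothetical freshness window (100 ms)


def sign_message(payload: dict, key: bytes, now: Optional[float] = None) -> dict:
    """Serialize payload with a timestamp and attach an HMAC-SHA256 tag."""
    ts = time.time() if now is None else now
    body = json.dumps({"payload": payload, "ts": ts}, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(msg: dict, key: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the payload if the tag is valid and the message is fresh, else None."""
    expected = hmac.new(key, msg["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["tag"]):
        return None  # tampered body or wrong key
    data = json.loads(msg["body"])
    now = time.time() if now is None else now
    if now - data["ts"] > MAX_AGE_S:
        return None  # stale: possibly a replayed message
    return data["payload"]


key = b"demo-shared-key"  # hypothetical; never hard-code real keys
msg = sign_message({"vehicle_id": "ambulance", "emergency": True}, key, now=1000.0)
print(verify_message(msg, key, now=1000.05))  # {'vehicle_id': 'ambulance', 'emergency': True}
print(verify_message(msg, key, now=1001.0))   # None (too old)
```

`hmac.compare_digest` is used for the comparison because it runs in constant time, which avoids leaking tag information through timing.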

Tier 3: Long-Term Explanation and Accountability

The third tier leverages large language models to provide post-hoc explanations of decisions in human-readable language. Building on Silva and Goodfellow’s (2023) work on counterfactual explanations in autonomous systems, this layer generates transparent justifications for vehicle behaviors that can be accessed by passengers, other road users, insurance companies, or regulatory bodies.

If a vehicle swerves onto a sidewalk to avoid a high-speed collision that would likely be fatal, Tier 3 can generate an explanation such as: "The vehicle detected an imminent high-speed collision that posed an 87% chance of severe injury to occupants. The emergency maneuver onto the empty sidewalk reduced this risk to 12%, while continuous scanning confirmed no pedestrians were present."
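Before any language model is involved, a counterfactual explanation needs a structured record of what the vehicle did and what the alternative would have been. The sketch below shows one hypothetical record format and a deterministic template that renders it as text; in the proposed framework an LLM would produce the wording, and the template merely stands in for that step. The `DecisionRecord` fields are assumptions, and the numbers reuse the illustrative 87% and 12% figures from the example explanation.

```python
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    """Hypothetical log entry consumed by the explanation layer."""
    hazard: str
    chosen_action: str
    counterfactual_action: str
    risk_counterfactual: float  # estimated severe-injury risk without the maneuver
    risk_chosen: float          # estimated severe-injury risk with the maneuver
    evidence: str


def explain(rec: DecisionRecord) -> str:
    """Render a counterfactual explanation from the structured record."""
    points = (rec.risk_counterfactual - rec.risk_chosen) * 100
    return (
        f"The vehicle detected {rec.hazard}. Had it chosen to "
        f"{rec.counterfactual_action}, the estimated risk of severe injury was "
        f"{rec.risk_counterfactual:.0%}. It chose to {rec.chosen_action}, reducing "
        f"that risk to {rec.risk_chosen:.0%} (a reduction of {points:.0f} "
        f"percentage points). {rec.evidence}"
    )


rec = DecisionRecord(
    hazard="an imminent high-speed collision",
    chosen_action="move onto the empty sidewalk",
    counterfactual_action="stay in its lane",
    risk_counterfactual=0.87,
    risk_chosen=0.12,
    evidence="Continuous scanning confirmed no pedestrians were present.",
)
print(explain(rec))
```

Keeping the numbers in a structured record and letting the language model only phrase them makes it easier to audit whether an explanation matches what the vehicle actually computed.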

This transparency directly addresses what Singh and Finn (2024) identified as the need for “clear accountability channels for autonomous decisions” in their framework for ethical transparency in AI transportation systems.

Cross-Cultural Ethical Considerations

One of the framework’s most innovative aspects is its incorporation of cultural variations in driving ethics. Yamamoto and Peters’ (2024) comparative analysis of ethical driving priorities across different societies revealed significant variations in how different cultures prioritize values like efficiency, courtesy, and risk tolerance in driving contexts.

The proposed system accommodates these differences through configurable ethical parameters that can be adjusted based on local driving norms and regulatory requirements. This approach aligns with emerging global AV policy frameworks while maintaining core safety principles.
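A minimal sketch of configurable ethical parameters might keep regional profiles as plain data and clamp every safety-critical value to a non-negotiable floor, so local tuning can shift courtesy or efficiency trade-offs but never weaken core safety. The region names, parameter names, and values below are hypothetical.

```python
from dataclasses import dataclass, replace

# Hypothetical lower bounds that no regional profile may go below.
SAFETY_FLOOR = {"collision_avoidance_weight": 1.0, "min_gap_s": 1.5}


@dataclass(frozen=True)
class EthicsProfile:
    collision_avoidance_weight: float
    min_gap_s: float           # minimum time gap to the vehicle ahead, in seconds
    courtesy_weight: float     # how strongly to yield to other road users
    efficiency_weight: float   # how strongly to minimize travel time


def apply_safety_floor(p: EthicsProfile) -> EthicsProfile:
    """Raise any safety-critical parameter that falls below the floor."""
    return replace(
        p,
        collision_avoidance_weight=max(p.collision_avoidance_weight,
                                       SAFETY_FLOOR["collision_avoidance_weight"]),
        min_gap_s=max(p.min_gap_s, SAFETY_FLOOR["min_gap_s"]),
    )


PROFILES = {
    "region_a": EthicsProfile(1.0, 2.0, courtesy_weight=0.8, efficiency_weight=0.3),
    "region_b": EthicsProfile(0.6, 1.0, courtesy_weight=0.2, efficiency_weight=0.9),
}


def load_profile(region: str) -> EthicsProfile:
    """Look up a regional profile and enforce the safety floor."""
    return apply_safety_floor(PROFILES[region])


print(load_profile("region_b"))
# region_b keeps its efficiency preference, but its gap and collision weight are raised.
```

Enforcing the floor at load time, rather than trusting each profile author, is one way to make "configurable but safe" a property of the code instead of a convention.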

Implementation Challenges and Future Directions

While the theoretical framework represents a significant advancement in ethical AV design, several challenges remain for practical implementation:

User Acceptance Testing: The effectiveness of LLM-generated explanations requires validation through real user feedback to ensure they genuinely build trust and understanding.

Computational Intensity: Ethical reasoning in real-time settings demands substantial processing power, potentially requiring simplification for practical deployment. Martinez and Carion’s (2024) work on computational efficiency in ethical reasoning systems offers promising approaches for optimization without sacrificing ethical rigor.
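One common way to keep real-time reasoning inside a compute budget, offered here as an assumption rather than as Martinez and Carion's method, is an anytime loop: evaluate candidate actions in order of promise, stop at the deadline, and return the best action found so far, falling back to a conservative default if nothing was evaluated. The cost function, candidate names, budget, and the `"brake"` fallback are hypothetical.

```python
import time
from typing import Callable, Sequence


def anytime_choose(candidates: Sequence[str],
                   cost: Callable[[str], float],
                   budget_s: float,
                   fallback: str = "brake") -> str:
    """Return the lowest-cost candidate evaluated before the deadline.

    Candidates are assumed to be pre-sorted so the most promising come first.
    The deadline is checked between evaluations, so one slow cost() call can
    still overrun; a real system would need bounded-time evaluators.
    """
    deadline = time.monotonic() + budget_s
    best, best_cost = fallback, float("inf")
    for action in candidates:
        if time.monotonic() >= deadline:
            break  # out of time: keep the best answer found so far
        c = cost(action)
        if c < best_cost:
            best, best_cost = action, c
    return best


costs = {"brake": 0.5, "swerve_left": 0.2, "swerve_right": 0.9}  # made-up costs
print(anytime_choose(["brake", "swerve_left", "swerve_right"], costs.__getitem__, budget_s=0.01))
print(anytime_choose(["swerve_left"], costs.__getitem__, budget_s=0.0))  # brake (no time)
```

The design trades optimality for a guaranteed answer: with more time the result can only improve, and with none the vehicle still has a safe default.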

Empirical Validation: The framework currently lacks empirical testing due to resource constraints. Future work should prioritize simulation testing using platforms like CARLA, as suggested by Khan and Meyer’s (2023) roadmap for ethical AV implementation.

Regulatory Alignment: As autonomous vehicle regulations continue to evolve globally, the framework must align with established standards such as ISO 26262 (functional safety of road vehicles) and SAE J3016 (taxonomy of driving automation levels), as well as the newly established UNECE AV ethical guidelines (2023).

Theoretical Foundations

The framework draws on several critical advances in AI research, including Zhang et al.’s (2023) breakthroughs in cooperative multi-agent reinforcement learning for urban environments, which demonstrated that vehicles could learn to optimize both individual safety and system-wide efficiency through collaborative decision-making.
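A common way to express the trade-off between individual safety and system-wide efficiency in cooperative multi-agent RL is to blend each agent's own reward with the team average. The sketch below shows that general reward-shaping technique with a hypothetical mixing coefficient `alpha`; it is not claimed to be Zhang et al.'s formulation.

```python
def blended_rewards(individual: list[float], alpha: float) -> list[float]:
    """Mix each agent's own reward with the team mean.

    alpha = 1.0 gives purely self-interested agents;
    alpha = 0.0 gives a fully shared (team) reward.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    team_mean = sum(individual) / len(individual)
    return [alpha * r + (1.0 - alpha) * team_mean for r in individual]


# Three vehicles; the second was forced into a risky situation (negative reward).
print(blended_rewards([1.0, -3.0, 2.0], alpha=0.5))  # [0.5, -1.5, 1.0]
```

Lowering `alpha` makes each agent bear part of the cost when a neighbor is put at risk, which is one way to encourage the collaborative behavior described above.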

Chen and Shalev-Shwartz's (2023) work on ethical AI frameworks for transportation also informed the approach, particularly their argument that incorporating human values into autonomous systems is a critical challenge for next-generation transportation.

Conclusion: Bridging Technical and Human Dimensions

What distinguishes this framework from conventional AV control systems is its explicit prioritization of ethical considerations alongside functional correctness. By creating a system that responds quickly to danger, navigates complex social interactions ethically, and explains its actions in human terms, the research addresses fundamental questions about how autonomous technologies should integrate into human societies.

As Ramirez and Dafoe (2023) noted in their work on bridging technical and policy communities in autonomous systems governance, the gap between what is technically possible and what is socially acceptable remains one of the central challenges in autonomous vehicle deployment. This three-tiered ethical framework represents a promising step toward closing that gap.

By balancing immediate safety needs with longer-term ethical considerations and transparent explanation, the approach offers a pathway toward autonomous vehicles that are not just technically proficient but socially responsible and worthy of public trust.

This article is based on research conducted in Duke Kunshan University’s Innovation Project as part of Project IE131: “Reinforcement Learning and Communication Protocols for Autonomous Vehicles,” which develops a theoretical framework integrating ethical reinforcement learning, V2X communication protocols, and large language models to enhance autonomous vehicle decision-making.